NEW DELHI

Google’s launch of a suite of artificial intelligence (AI) tools, which its researchers reported had not only suggested a new drug combination for cancer therapy but one that also stood up to early tests in the laboratory, is a signal that research scientists ought to be integrating AI into the process of scientific discovery.

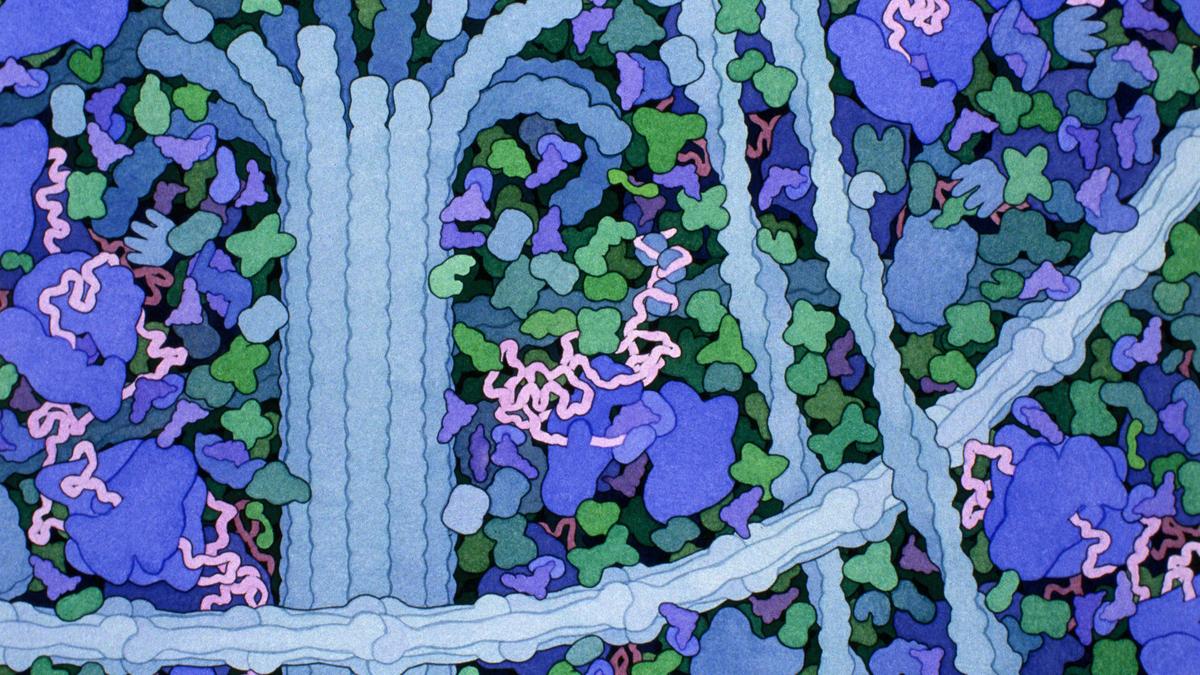

Built on the Gemma family of open models, the Cell2Sentence-Scale 27B (C2S-Scale) is a 27-billion-parameter foundation model designed to “understand” the language of individual cells.

“This announcement marks a milestone for AI in science,” Shekoofeh Azizi and Brian Perozzi, staff scientists at Google DeepMind and Google Research respectively, wrote in a post accompanying the announcement.

“C2S-Scale generated a novel hypothesis about cancer cellular behaviour and we have since confirmed its prediction with experimental validation in living cells. This discovery reveals a promising new pathway for developing therapies to fight cancer,” they added.

A novel use

The C2S-Scale model was trained on a large dataset of real-world patient and cell-line data, based on which it suggested that a drug called silmitasertib could be used to improve the immune system’s ability to identify cancerous tumours when they are nascent.

To be sure, silmitasertib (CX-4945) is currently in several clinical trials to treat multiple myeloma, kidney cancer, medulloblastoma, and advanced solid tumours. In January 2017, the US Food and Drug Administration granted it orphan drug status for advanced cholangiocarcinoma.

However, the novelty of Google’s effort lay not in (re)discovering the drug but in scanning the vast cancer biology literature to suggest a novel use for a drug candidate. Pharmaceutical companies in their usual course spend billions of dollars and employ highly trained personnel to reveal similar insights.

“It’s a nice result and was a well-chosen problem to test the capabilities of an LLM [large language model],” Sunil Laxman, systems biologist at the Institute for Stem Cell Science and Regenerative Medicine, Bengaluru, told The Hindu. “This would, in the usual course, have taken a focussed team of dedicated researchers several months to suggest such a use of the drug.”

‘It’s very good’

Dr. Laxman, however, underlined that the model hadn’t suggested something that couldn’t have occurred to a trained biologist, nor had it discovered something new about cancer biology.

“It’s very good. Not great. The average laboratory in India will not have access to a vast library of chemical compounds where they can be tested. This one had, and while it has certainly shortened the time [in this case] for a potential discovery, it isn’t a path-breaking discovery.”

LLMs are at the core of many current AI systems and are trained on large volumes of text, including human-annotated data, to process human language and solve problems.

Drs. Azizi and Perozzi have contended that their results demonstrate that it’s possible to create LLMs that don’t have to be trained on the rules of biological systems. Instead, they wrote, the models can be encouraged to figure the rules out just by having their successes ‘rewarded’ and failures ‘punished’. This is how some of the most powerful chess-playing AI systems were trained.

Good reasoning

Siddhartha Gadgil, a mathematics professor at the Indian Institute of Science, Bengaluru, interpreted the findings as significant and reckoned that the best AI models in mathematics were today at the level of a “skilled mathematician but not at genius-human levels”.

He said there were “no reasons” to suppose that AI would never be able to solve the most challenging maths problems.

“We can’t say when an AI model will solve the Riemann Hypothesis but there’s no reason to suppose it never can. Already there are several initiatives and even companies that are tackling unsolved mathematical problems and they do come up with novel hypotheses.”

Prof. Gadgil cited the International Mathematical Olympiad 2025, where OpenAI, the maker of ChatGPT, said an “experimental reasoning model” had figured out answers to the questions that, had it been a human contestant, would have won it a gold medal. The model followed the same time limits as human participants.

Significantly, this model wasn’t trained for the Olympiad but was a general-purpose reasoning model with sufficiently good reasoning powers.

That said, the Olympiad’s problems aren’t representative examples of the problems professional mathematicians work on; they’re intended for talented high-schoolers and constitute a gold-standard test of mathematical talent.

The Riemann hypothesis, on the other hand, is a problem many mathematicians are working on. It’s a statement about the nature of prime numbers whose proof has eluded mathematicians for over a century. The Clay Mathematics Institute in the US has promised to reward its solver with $1 million.

‘Still divided’

Given the vast literature to which these LLMs are exposed, they’re expected to come up with novel and useful ideas about how to approach a mathematical problem that an equivalent human expert wouldn’t always immediately see, he added.

Prof. Gadgil, who embraces LLM tools in his own research, added that his field was “still divided” on incorporating them. According to him, there were several good models that “showed no signs of plateauing and had a lot of latent capabilities that still weren’t fully explored”, and they therefore ought to be encouraged as tools for working researchers to incorporate.

Published – October 25, 2025 06:00 am IST