Can you weigh an elephant before and after it picks up a one-rupee coin, and tell the difference? You can if the measurement is precise. To detect a 4-gram coin on a 4-tonne creature, the scales must come with a resolution of at least 1 part per million (ppm). Precise measurements such as these routinely lead to startling discoveries in fundamental physics. This is what the physicist Albert Michelson meant by “The future truths of physical science are to be looked for in the sixth place of decimals”.
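The arithmetic behind the elephant-and-coin picture can be checked in a few lines. This is only an illustrative sketch: the function name `parts_per_million` and the constants are made up for this example, and the masses are the round figures quoted above.

```python
def parts_per_million(small: float, large: float) -> float:
    """Return `small` as a fraction of `large`, expressed in parts per million."""
    return small * 1_000_000 / large


# Round figures from the text; both names are hypothetical, for illustration only.
COIN_GRAMS = 4.0              # one-rupee coin
ELEPHANT_GRAMS = 4_000_000.0  # 4 tonnes = 4,000,000 grams

required_ppm = parts_per_million(COIN_GRAMS, ELEPHANT_GRAMS)
print(f"Resolution needed to notice the coin: {required_ppm} ppm")
```

With these inputs, the coin is one millionth of the elephant's mass, which is where the 1 ppm figure comes from.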

This June, the Muon g-2 (pronounced “gee minus two”) Experiment at Fermilab in the US presented its highly anticipated final results. With data collected over three years and with the involvement of more than 170 physicists, the collaboration had measured a unique property of a subatomic particle called a muon with an unprecedented precision of 0.127 ppm, outdoing its stated goal of 0.140 ppm.

Usually, physicists check such measurements against the prediction of the Standard Model, the theory of subatomic particles that predicts their properties. If they don’t match, the measured value would hint at the presence of unseen forces. But in this instance, there are two ways to make theoretical predictions about this property of the muon. One of them is consistent with the Fermilab experiment and the other is far off. Nobody knows which really is right, and an intriguing drama has been unfolding over the last few years with no clear resolution in sight.

g minus 2

The muon is an elementary particle that mimics the electron in every trait except for being 207 times heavier. Discovered in 1936 in cosmic rays, its place in the pattern of the Standard Model was, and still is, something of a mystery, prompting physicist Isidor Rabi to remark: “Who ordered that?”

The muon carries non-zero quantum spin, which means it functions like a petite magnet. The strength of this magnet, called the magnetic moment, is captured by a quantity called the g factor. In a high-school calculation, g would be exactly 2, but in advanced theory its value drifts a tiny bit from 2 due to quantum field effects. It is this so-called anomalous magnetic moment that the Muon g-2 Experiment painstakingly ascertained.

Measurements of the g-2 of the muon were first made at CERN in Europe and the results were published in 1961 with a precision of 4000 ppm. Over the next two decades at CERN, the precision was improved to 7 ppm. Matters took an interesting trans-Atlantic turn when the Muon E821 experiment at the Brookhaven National Lab in the US took data between 1997 and 2001 and achieved a precision of 0.540 ppm, which was similar to the uncertainty in the theoretical prediction. In other words, the two numbers, the theoretical calculation and the observed value, could be meaningfully compared.

And lo and behold, they significantly disagreed.

Contrast with theory

Nothing seemed to have been amiss in the experiment, so many physicists wagered that the disagreement was a hint of ‘new physics’. Numerous explanations involving theories beyond the Standard Model poured into the literature over the next two decades. At the same time, theoretical physicists got down to refining the Standard Model prediction itself, which was no mean task.

So stood affairs until Fermilab started measuring g-2 in 2017. When it had collected just 6% of the total intended data by 2021, it had already reached a precision of 0.460 ppm, comparable to E821. This first result was in such excellent agreement with E821 data that when the two results were combined, the discrepancy with theory deepened to worrying levels.
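To see why combining two agreeing measurements sharpens the comparison with theory, here is a minimal sketch of an inverse-variance combination. It assumes the two uncertainties are independent and uncorrelated, which is a simplification and not necessarily how the collaborations combined their results. The function name `combined_uncertainty` is hypothetical; the input precisions are the ones quoted in the text.

```python
import math


def combined_uncertainty(uncertainties):
    """Uncertainty of an inverse-variance weighted average.

    Assumes the inputs are independent and expressed in the same units.
    """
    return 1.0 / math.sqrt(sum(1.0 / u**2 for u in uncertainties))


# Precisions quoted in the text, in ppm (names are illustrative).
E821_PPM = 0.540
FERMILAB_2021_PPM = 0.460

combined = combined_uncertainty([E821_PPM, FERMILAB_2021_PPM])
print(f"Combined precision: {combined:.3f} ppm")
```

Under these assumptions the combined precision comes out at about 0.35 ppm, tighter than either measurement alone. A smaller experimental error bar makes the same gap with theory more significant.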

But the spectacle didn’t end there. On the very day that Fermilab announced this result to much fanfare, there quietly appeared in Nature a new paper in which a group of physicists, called the Budapest-Marseille-Wuppertal (BMW) Lattice collaboration, argued there may be no gap between the theoretical and experimental values after all.

Theorists compute the muon g-2 using either (i) Feynman diagrams, a tool that has served calculations in quantum field theory for three quarters of a century, or (ii) the so-called lattice, a supercomputer simulation of spacetime as a discretised grid that represents quantum fields. Both approaches are technically very challenging in this context.

The final results from Fermilab this June are less confusing. They are consistent with the collaboration’s earlier announcements; it’s their contrast with theory that is unsettled.

An old friend

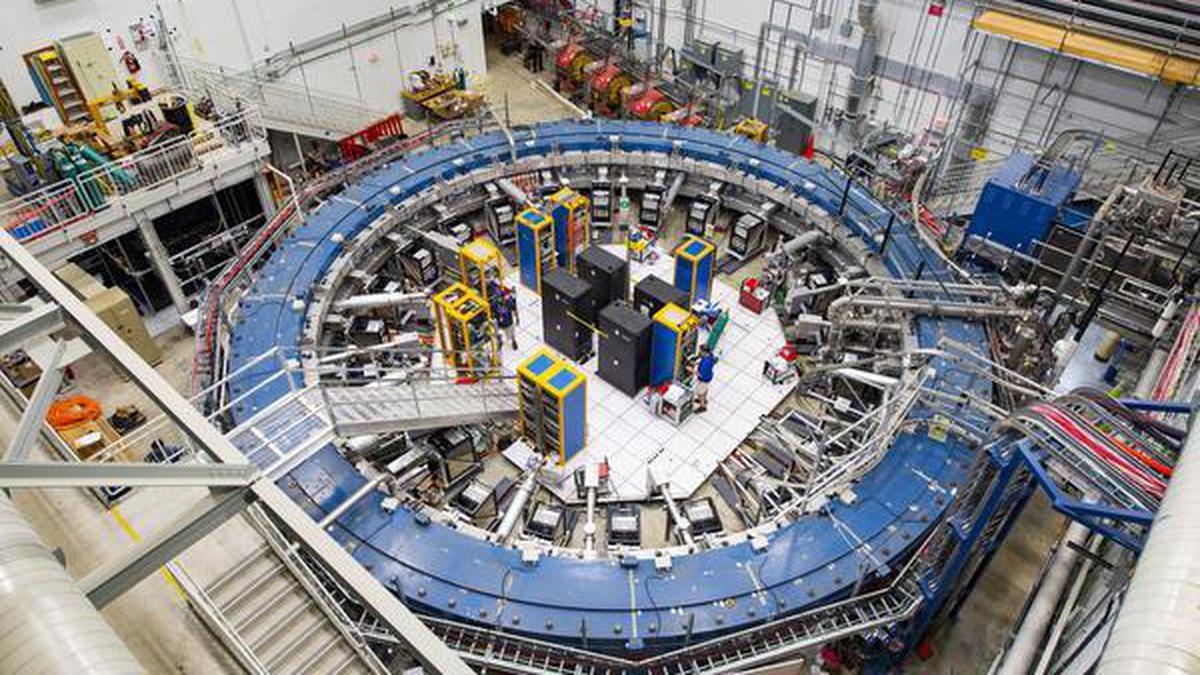

The experimental setup itself was ingenious. A beam of anti-muons is injected into a 15-m-wide ring with a uniform magnetic field. There the antiparticles make circular orbits with a characteristic frequency. Meanwhile, the antiparticles’ spin vectors, a fundamental property of theirs, rotate in the magnetic field and they resemble spinning tops, spinning with a certain spin frequency. The central conceit is to measure the difference between the frequency of the circular orbits and the spin frequencies. This difference carries direct information on the muon’s g-2 value.
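In an idealised picture, the link between the frequency difference and g-2 can be written in one line. The symbols here are notation introduced for this sketch, not taken from the article: \omega_s is the spin frequency, \omega_c is the orbital frequency, B is the magnetic field strength, e is the magnitude of the muon's charge, and m_\mu is its mass. The relation ignores the corrections a real experiment must apply, such as the effect of the focusing electric fields.

```latex
a_\mu \equiv \frac{g - 2}{2},
\qquad
\omega_a \equiv \omega_s - \omega_c = a_\mu \, \frac{e B}{m_\mu}
```

If g were exactly 2, the spin and the orbit would rotate in step and the difference \omega_a would vanish. Any measured difference, together with a precise knowledge of the field B, therefore gives the anomaly a_\mu directly.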

Both E821 and Fermilab operated on this principle, which raises the question of whether the two could share the same hidden flaw. This is not impossible: Fermilab reused part of the E821 equipment, and thus some unknown defects may have made their presence felt in the Fermilab data as well. This is why it is crucial to have a completely independent measurement by a different experimental approach. An upcoming effort at the Japan Proton Accelerator Research Complex will do just this.

Uncertainty is an old friend of fundamental physics. It has always borne the promise of an imminent disclosure of a deep secret of nature. We wait now for the next word from theorists and hope that the jury will soon be in.

Nirmal Raj is an assistant professor of theoretical physics at the Centre for High Energy Physics in the Indian Institute of Science, Bengaluru.

Published – August 13, 2025 05:30 am IST